The future of AI-powered censorship is here, and the early returns are as error-filled and clumsily destructive as Google’s infamous Gemini rollout.

On March 4th, Yves Smith–nom de plume for the editor of Naked Capitalism, a popular site containing economics commentary and journalism–received an ominous letter from the site’s ad service company:

Hope you are doing well! We noticed that Google has flagged your site for Policy violation and ad serving is restricted on most of the pages with the below strikes…

The letter went on to list four data fields: VIOLENT_EXTREMISM, HATEFUL_CONTENT, HARMFUL_HEALTH_CLAIMS, and ANTI_VACCINATION. From there the firm explained:

If Google identifies the flags consistently and if the content is not fixed, then the ads will be disabled completely to serve on the site.

“So this is a threat of complete demonetization,” says Smith.

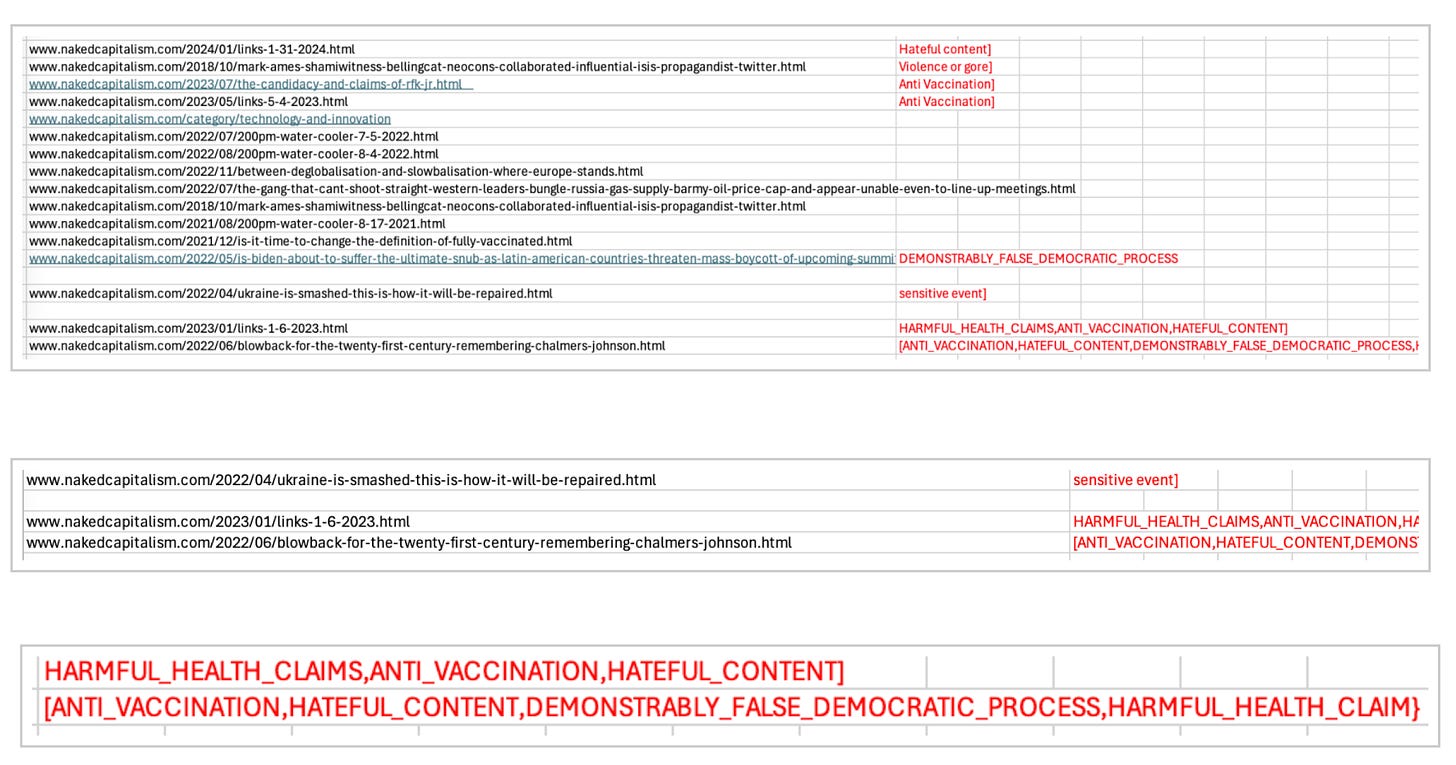

She discovered a spreadsheet listing her offenses. Excerpts are reprinted below. The third image is the enhanced view of Google’s explanation for the last two entries:

Naked Capitalism is run by Smith, a Harvard Business School graduate and longtime financial services sector expert. The site often tackles issues the financial press avoids and gained renown in the wake of the 2008 crash. (I appeared with Yves on a Moyers and Company show about banking years ago.) Naked Capitalism helped force the resignation of the SEC’s Director of the Office of Compliance and Inspections, Andrew Bowden, when it described Bowden telling a Stanford audience containing many private equity executives how his son would like to work in private equity. Later, California Pension Fund chief Ben Meng resigned in the wake of the site’s reporting.

Naked Capitalism is a home for smart, independent commentary about a financial services industry that is otherwise almost exclusively covered by writers and broadcasters who’d jump at a job offer from the companies they cover. It’s unique, useful and full of links and primary source material. What 16 items did Google find objectionable in its archive of 33,000 posts?

- A Barnard College professor, Rajiv Sethi, evaluated Robert F. Kennedy Jr.’s candidacy and wrote, “The claim… is not that the vaccine is ineffective in preventing death from COVID-19, but that these reduced risks are outweighed by an increased risk of death from other factors. I believe that the claim is false (for reasons discussed below), but it is not outrageous.” This earned the judgment, “[AntiVaccination].” Sethi wrote his own explanation here, but this is a common feature of moderation machines; they can’t distinguish between advocacy and criticism.

- A link to “Evaluation of Waning of SARS-CoV-2 Vaccine-Induced Immunity,” a peer-reviewed article from the Journal of the American Medical Association, was deemed “[AntiVaccination].”

- An entry critical of vaccine mandates, which linked to the American Journal of Public Health article “SARS-CoV-2 Infection, Hospitalization, and Death in Vaccinated and Infected Individuals by Age Groups in Indiana, 2021–2022,” earned the [HARMFUL_HEALTH_CLAIMS, ANTI_VACCINATION, HATEFUL_CONTENT] tags.

In this context, Engelhardt wrote that “it’s hard not to imagine The Donald’s success as another version of blowback.” He added,

given his insistence that the 2020 election was ‘fake’ or ‘rigged’… it seems to me that we could redub him Blowback Donald.

This entry earned the absurd DEMONSTRABLY_FALSE_DEMOCRATIC_PROCESS judgment. Obviously, Google’s machine was unable to distinguish commentary, even overtly negative commentary, from endorsement. “An AI could have read it as Engelhardt saying that the election was stolen, as opposed to Engelhardt saying Trump said the election was stolen,” is how Smith put it.

There was some slightly more hardcore content on Google’s list–I can see how a machine might trip over a Gaza-themed spoof of the Hall and Oates song “You Make My Dreams Come True”–but the algorithms appeared mostly to be going ballistic over throwaway lines, or misreading criticism as its opposite. Also, the company only offered explanations for 8 of 16 flags (really 14, as the company sent the same entry twice in one place, then listed the site’s “Technology and Innovation” category page as objectionable in another). For a site like Naked Capitalism that includes many links in each post, and posts a lot, even one non-specific complaint raises all sorts of issues.

“Every day on weekdays we have six posts, and on weekends we have three,” Smith says.

So we post religiously in fairly large numbers… Anything I could do could conceivably trigger demonetization. I mean, they say ‘consistently,’ but what does ‘consistently’ even mean?

In recent years, Racket readers have become acquainted with a variety of “Meet the Censored” subjects, whose usual problem was removal or deamplification after a questionable judgment by an algorithmic reviewer. Naked Capitalism is dealing with the futuristic next step.

Technologists are in love with new AI tools, but they don’t always know how they work. Machines may be given a review task and access to data, but how the task is achieved is sometimes mysterious. In the case of Naked Capitalism, a site where even comments are monitored in an effort to pre-empt exactly these sorts of accusations, it’s only occasionally clear how or why Google came to tie certain content to categories like “Violent Extremism.” Worse, the company may be tasking its review bots with politically charged instructions even in the absence of complaints from advertisers.

Companies (and governments) have learned that the best way to control content is by attacking revenue sources, either through NewsGuard- or GDI-style “nutrition” or “dynamic exclusion” lists, or advertiser boycotts. Previously, a human being was sometimes involved in reviewing the final placement of sites in certain buckets, or problems might at least have been prompted by human complaints. Now, from flagging all the way through to the inevitable “Hi! You’ve been selected for income termination!” form letter, the new review process can be conducted without any human involvement at all.

“No human is flagging these posts,” Smith quoted an ad sales expert as saying.

I’m pretty sure advertisers have no idea that the Google bot is blocking their carefully crafted communications from reaching [her] readers.

Whether it’s AI or just an algorithm, it’s not clear whether Google is knocking Smith’s site on its own initiative, or to stay in compliance with a law like Europe’s Digital Services Act, since the company hasn’t responded to questions. The timing is odd; the site has never faced any kind of review before, and the breakdown of its supposed strikes suggests that even Google’s internal review process isn’t finding many recent issues. (Just four in the last two years.) Either way, the decision to even once tell a media outlet that it must remove content to remain ad-eligible sends a message someone like Smith will think of every time she publishes going forward. And think she will, because like most sites of this size, Naked Capitalism can’t afford to forgo even a small amount of revenue.

“We are so lean,” Smith says.

We have nowhere to cut.