The tech industry’s emerging role as a defense contractor is transforming the global battlefield in ways that frighten and mesmerize. We’re now seeing how data-driven technology is shaping modern warfare, though it’s hard to fully fathom the level of death and destruction that is in store.

One of the most chilling examples of Silicon Valley’s military advance is Israel’s use of Amazon and Google cloud services and AI technology to store surveillance information on Gaza’s population, as part of a contract called Project Nimbus. The technology is helping Israel process and analyze vast amounts of data on Palestinians, including facial recognition and demographic information. The AI tools have reportedly been used to monitor Palestinians and force them off their land, and in some cases have enabled Israel’s military to carry out aerial assassinations that have killed and injured scores of civilians.

In Ukraine, the battlefield has turned into a laboratory for the latest military technologies. Palantir, a data-analytics company with close ties to the American intelligence community, uses AI tools to analyze satellite imagery, open-source data, and drone footage, and to oversee most of Ukraine’s military targeting efforts, in particular against Russian tanks and artillery. While Palantir has been dubbed the AI arms dealer of the 21st century, a host of other tech firms have descended on Ukraine. These companies are selling weapons systems and collecting a trove of data about how battles are fought and how people and machines react under fire. The data itself is a crucial resource and a windfall for companies, because AI systems need data as fuel: they need to be fed large numbers of images from complex environments in order to operate.

The Pentagon currently has more than 800 active military AI projects in the works (that we know of), in addition to autonomous weapons systems that are being developed and tested in secret. Washington’s rapid development of intelligent weapons is largely aimed at countering the global ambitions of China. Michèle Flournoy, a U.S. defense advisor who worked in the office of the Secretary of Defense under Bill Clinton and Barack Obama, says that AI development and new operational concepts on the battlefield should be accelerated. Flournoy has close ties to defense companies through the consulting firm WestExec Advisors, which she co-founded with current Secretary of State Antony Blinken. Having also served on the board of defense contractor Booz Allen Hamilton, Flournoy is seen as a polarizing figure who pushed for NATO intervention in Libya and helped escalate U.S. military involvement in Afghanistan. Tellingly, she is considered a frontrunner for the job of defense secretary if Kamala Harris becomes president. In a recent interview, Flournoy said an AI arms race is already underway in China and among U.S. companies, and noted that Washington, unlike Moscow and Beijing, has agreed to abide by international rules to ensure that this powerful technology would be used responsibly.

But events on the ground refute Flournoy’s rhetoric. Denver-based Palantir is a major provider of AI tools and “battle tech” for Israel’s operations in Gaza. The Israeli army is using autonomous combat drones (remote-controlled quadcopters) in densely populated civilian areas. These drones, manufactured by the Israeli company Elbit Systems, have attacked hundreds of civilians, killing and injuring dozens at a time. Witnesses said the drones opened fire on hundreds of people who had lined up for the arrival of food aid in January 2024 on Al-Rasheed Street, near Gaza City’s coast. Elbit Systems has weapons factories in New Hampshire and Texas, and in Leicestershire in the UK.

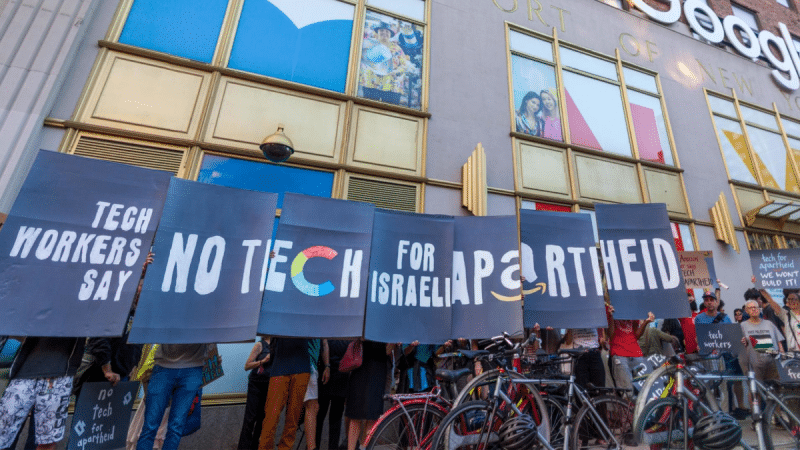

Workers protest outside Google’s New York City office on September 8, 2022. (Photo: NoTechForApartheid)

Meanwhile, two of Israel’s leading state-owned arms manufacturers are required to use Amazon and Google for cloud computing needs, as part of Project Nimbus. One of these Israeli companies, Rafael Advanced Defense Systems, provides the military with missiles, drones and other weapons systems. Rafael manufactures a ‘Spike’ line of missiles that can create a lethal spray of metal, causing tissue to be torn from the flesh. Military analysts said that Spike missiles were likely used in the April 1 drone killing of seven aid workers from World Central Kitchen. Rafael has an American subsidiary that provides Spike missiles to the U.S. Army. These weapons are not new; Human Rights Watch documented evidence of the Israeli army using the missiles against civilians in Gaza in 2009. When detonated, they scatter hundreds of cubic tungsten fragments, providing a “killing power” that literally shreds their targets while puncturing thin metal and cinder block.

By the company’s own admission, these missiles have a “high hit and kill probability,” which seems to be the point. It is no accident that these lethal autonomous weapons systems are killing innocent people and obliterating civilian infrastructure. The push for precision-driven weapons and AI-powered wars is relentless, and it’s driven in large part by greed, with little or no regard for the sanctity of life. Public discussion of the unholy alliance among tech start-ups, arms companies and governments is abysmal. Many experts and journalists have expressed concern over the rapid acquisition of cheap drones by non-state actors such as ISIS terrorists or Iranian-backed insurgents, yet believe that autonomous weapons will help Washington and its allies project their hard power abroad, as though this policy will make the world a safer place. Progressive academics fear that the private sector, instead of government, is setting the regulatory agenda, as though the rule-makers in the security sector have only now been captured. These academics seem to have forgotten that U.S. President Dwight D. Eisenhower coined the expression “military-industrial complex” in his farewell address in 1961. The relationship between private and public actors has always been seamless.

It comes as no surprise that there is a disturbing lack of transparency about the ultimate rationale behind developing lethal autonomous weapons systems and the nature of new technology in the pipeline. There’s also very little debate on how, in real terms, society might manage this escalation in order to avoid risking a global catastrophe. In September, the U.S. Defense Advanced Research Projects Agency (DARPA) announced a new “trilateral project” to integrate its defense research with the British Defence Science and Technology Laboratory (Dstl) and Defence Research and Development Canada (DRDC), the science and technology arm of the Department of National Defence. The project ostensibly involves research and development in AI, cyber, resilient systems, and information domain-related technologies. But the essence and scope of this project remain opaque, likely for good reason.

We should not blindly accept what tech companies and their benefactors in government and the weapons industry impose, nor should we fuel AI-enabled wars as consumers. We need to scrutinize this paradigm shift and, at the very least, we need to understand how these technologies endanger human life and may ultimately defy human nature as we know it.

Judi Rever is a journalist from Montréal and the author of In Praise of Blood: The Crimes of the Rwandan Patriotic Front.