We keep hearing that Israel’s genocide in Gaza is “AI-powered.” Many pundits warn that this marks a new era in warfare, the first time that “automated” war has been waged. Foreign Policy declares that “AI Decides Who Lives and Dies.” Vox reports that “AI tells Israel who to bomb.” The Washington Post claims “Israel offers a glimpse into the terrifying world of military AI.”

These narratives are misleading, by design. They distract from the institutions and ideologies responsible for the devastation, and from the sheer scale of the devastation itself. Like other appeals to “artificial intelligence”—a confused and confusing term that is inseparable from racist ideologies—these framings invite imperialist experts to offer their non-solutions. Rather than fight the entities responsible for genocide and support the Palestinian resistance, these experts want us to blame the genocide on “misuses” of computers—misuses they promise to “fix” through their partnerships with the US government, corporations, and academia (the institutions that have enabled the genocide in the first place).

“AI-Powered” Bombings: A Story Approved by the Israeli Military Censor

The now common narrative about an “AI-powered genocide” in Gaza is largely based on articles by Yuval Abraham, an Israeli journalist and activist working for +972. Abraham is famous for co-directing No Other Land, the recently Oscar-winning documentary about Israel’s efforts to destroy Masafer Yatta and the inspiring resistance by the residents. Abraham’s co-director, Hamdan Ballal—who could easily have told the story with the film’s other Palestinian co-director, but could not have gotten to the Academy Awards without Israeli support—was kidnapped and beaten by Israeli settlers and the army in March 2025. The publicity following the Oscar win also led to justifiable criticism of Abraham’s actions, including his circulation of Israeli atrocity propaganda concerning October 7 and his use of the Oscar acceptance speech to condemn Hamas and draw a false equivalence between Al-Aqsa Flood and Israel’s genocidal war.

Over the past year, Abraham has written several articles that have been used to support the idea of an “AI-powered genocide,” even though Abraham himself, tellingly, never uses the term “genocide.” Some of these articles were published in a collaboration between +972 and the Guardian, and have been widely quoted by pro-Palestinian activists and news outlets. Middle East Monitor, for instance, referred to “Israel’s AI-powered genocide” based on this reporting.

Since +972 is an Israeli outlet, it is subject to the Israeli military censor. Any story concerning “sensitive” matters must first be approved by the censor to ensure it doesn’t compromise the Zionist entity’s “security” interests. And if we read Abraham’s articles carefully, it’s not surprising that they passed, because they minimize the genocide, transfer blame from people to machines, and (whether intentionally or not) promote Israeli technologies.

What’s the Difference Between “AI Targeting” and Just Bombing Everything?

Abraham’s widely cited article on a system called Lavender (published April 3, 2024) begins by quoting a book written by the Israeli military. The book claims that there is a “bottleneck” in generating targets (humans are apparently too slow) and suggests that “AI” will solve this problem, thereby revolutionizing warfare. Rather than challenge the premise that the Israeli military has a sophisticated method of “generating targets”—which plays into Israel’s narrative that it uses “precision targeting”—Abraham concludes that the revolutionary technology the Israeli military dreamed of now exists: it is Lavender.

Abraham’s sources are Israeli soldiers who convinced him that Lavender “played a central role in the unprecedented bombing of Palestinians.” Abraham adds that his anonymous sources told him Lavender’s “influence on the military’s operations was such that they essentially treated the outputs of the AI machine ‘as if it were a human decision.’”

In fact, all decisions made over the course of this genocide are “human decisions.” Even if a computer was used in the process of enacting genocide, it is people who decided to use the computer, designed the software, chose the data, and determined what constitutes a valid “output” and how that output will factor into the ultimately human decision to kill. Machines don’t pull triggers; people do. While AI pundits debate absurd scenarios where machines wake up and decide to turn everything into paperclips, machines on their own are nothing. In the real world, we have material systems and institutions in which human beings make political and moral decisions. No machine is autonomous; everything depends on resources allocated by people, and anything can be unplugged.

As of January 2025, it was estimated that over 70 percent of structures in Gaza had been damaged or destroyed. Israel has dropped over 85,000 metric tons of bombs on Gaza since October 2023 (not to mention the engineered starvation and lack of medical supplies). Basically everything has been bombed, much of it more than once, as we know from accounts by the people of Gaza and from satellite images.

Figure showing Israel’s systematic bombing of all civilian infrastructure in Gaza, using satellite images. (Source: “‘Nowhere and no one is safe’: spatial analysis of damage to critical civilian infrastructure in the Gaza Strip during the first phase of the Israeli military campaign, 7 October to 22 November 2023,” Conflict and Health, 2024).

Why would “target generation,” regardless of how it works, matter when the Zionist entity decided to bomb all of Gaza—to try to send it “back to the stone age,” as Israel’s craven politicians have openly said for years?

Israel bombed the areas where people lived, and then the areas where people fled for shelter and food. It has intentionally attacked all life-giving facilities. By October 26, 2023, Israel had bombed over thirty-five health care facilities, including twenty hospitals. As Mary Turfah wrote then, this was a clear case of “the logic of elimination,” where “the colonizer destroys the medical infrastructure to diminish a people’s ability to live, after they try and cannot crush the people’s will.” By now, several hospitals have been bombed multiple times. Israel aimed to destroy everything that was good for life in Gaza, from mosques to bakeries to water and sewage facilities (85 percent of such facilities were destroyed by early October 2024).

What does target generation even mean, then, when the settler-colonial policy is one of elimination? And since Israel has been bombing everything, why so much hype for stories about AI-based targeting? The focus on target generation minimizes the genocidal intent, painting the perpetrators as victims of sorts, pawns of the machine. Abraham writes,

The [Israeli] army gave sweeping approval for officers to adopt Lavender’s kill lists, with no requirement to thoroughly check why the machine made those choices or to examine the raw intelligence data on which they were based. One source stated that human personnel often served only as a “rubber stamp” for the machine’s decisions.… This was despite knowing that the system makes what are regarded as “errors” in approximately 10 percent of cases, and is known to occasionally mark individuals who have merely a loose connection to militant groups, or no connection at all.

But again, these are not “the machine’s decisions,” and Israel has never needed “AI” to indiscriminately bomb Palestinians. Destruction of entire areas is a long-running Zionist policy. Saying these attacks are primarily targeted at “militants,” with some deviations due to technical “errors,” sanitizes Israel’s genocidal nature. It also elevates “AI,” treating it as a revolutionary new technology with a mind of its own.

In getting trapped in the logic of target generation, Abraham also affirms the propaganda that the Israeli military otherwise abides by certain “ethics.” The Israeli military is infamous for appointing its own philosophers to develop an “Army Code of Ethics,” as well as lawyers who ensure adherence to international law. According to Israel, these experts decide what is an acceptable amount of “collateral damage.”

Abraham writes that “in an unprecedented move,” the Israeli military decided recently that “for every junior Hamas operative that Lavender marked, it was permissible to kill up to 15 or 20 civilians; in the past, the military did not authorize any ‘collateral damage’ during assassinations of low-ranking militants.” Abraham added that “in the event that the target was a senior Hamas official with the rank of battalion or brigade commander, the army on several occasions authorized the killing of more than 100 civilians in the assassination of a single commander.”

This framing makes it seem like the policy isn’t one of elimination, but rather that the Israeli military simply moved its “ethical” threshold from something acceptable (around fifteen civilians for every target) to something that seems extreme even by liberal Zionist standards (around a hundred civilians for every target). This reporting never challenges the targeting of the resistance, of course. It is implied that it would be fine for Israel to murder every single “important” Hamas member, as long as it does so with more precision.

Abraham says as much in an interview with +972’s podcast, in Hebrew. The interviewer asks, “These people [marked by Lavender], we have no way of knowing if they’re really active in Hamas?” Abraham answers:

Before the war, Lavender was just an aid. It gave us a lead. Say, there was a phone call and someone on the call was in the Lavender database. Ok, that’s great, fine, so he’s a suspect. Maybe he’s in Hamas, maybe he’s not. Maybe he just has similar communication patterns to Hamas members. Journalists, for example, who regularly talk to Hamas members and attend Hamas rallies and take photos, they could have similar communication patterns.

It was “the madness of October 7,” Abraham says, that drastically changed things. The Israeli army apparently started taking Lavender’s recommendations as the truth, without further “verification,” despite knowing that Lavender has a “1 in 10” chance of mistakenly identifying someone as an “active Hamas member.”

Does Lavender Even Exist?

We can’t even be sure that Lavender exists as described by +972. Unlike Edward Snowden’s 2013 disclosures of mass surveillance by the NSA and collaborating companies, Abraham’s reporting provides no internal documents about the system. +972 claims to have obtained footage from a presentation on “human target machines” given by the Israeli military at Tel Aviv University, along with the presentation slides, but neither the footage nor the full slides have been released. Abraham’s article shows only two slides from the presentation, which lack details and don’t name any system. Even if Abraham had access to detailed documents, the Israeli military censor wouldn’t have approved their publication (which is why Israeli outlets like +972 can’t do serious reporting on these topics).

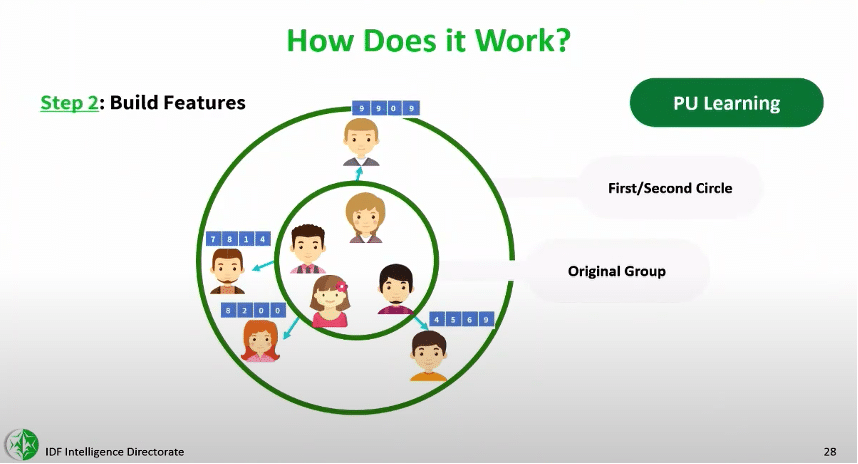

A slide obtained by +972 from a private presentation given by the Israeli military’s Unit 8200 at Tel Aviv University’s “AI week.” The slide shows that the Israeli military surveils people by looking at characteristics of individuals in their inner and also outer circles. This is how spying works generally.

Even if Lavender does exist, it might as well be a list of the GPS coordinates of every structure in Gaza. The result would be no different from what we’re seeing: utter destruction. There is no super-machine in the Israeli army’s possession telling them what to bomb. Their compulsions and ideologies can’t be found in the computer.

The story that what is happening in Gaza is driven by a machine makes it look as if some Israeli soldiers are taking a moral stand. In an interview with Democracy Now!, Abraham said some of his informants were “shocked by committing atrocities and by being involved in things and killing families, and they felt it’s unjustifiable. And they felt a responsibility, I think.” He added that “they felt a need to share this information with the world, out of a sense that people are not getting it.” Why did their shock not stop them from obeying their genocidal orders?

Abraham doesn’t endorse such refusals in any case. When asked by Israeli media whether he thinks Israeli soldiers should refuse orders to expel Palestinians in Masafer Yatta from their homes (expulsions documented in No Other Land), Abraham replied: “It’s not my place to say. Everyone has to act according to their conscience…our emphasis isn’t on the individual soldier.”

Abraham’s informant soldiers seem to have no conscience. These soldiers aren’t acknowledging the genocide, let alone challenging it. They are merely raising questions about the protocol of “target generation” (seemingly without realizing that all of Gaza is the target). Abraham’s informants told him that for many targets they used “dumb” bombs, which cause massive damage, rather than so-called smart bombs, which create more localized damage. Some of the soldiers justified this. “You don’t want to waste expensive [smart] bombs on unimportant people—it’s very expensive for the country and there’s a shortage [of those bombs],” one soldier told Abraham. Another similarly said, “it would be a shame to waste bombs” on Palestinians who aren’t important “Hamas operatives.”

Yet another informant told Abraham that the bombing of Gaza is unprecedented in scale, but that “I have much more trust in a statistical mechanism than a soldier who lost a friend two days ago. Everyone there, including me, lost people on October 7. The machine did it coldly. And that made it easier.” Whatever this is, it is not resistance to the genocide.

Israel’s official response to Lavender is actually closer to the truth than +972’s coverage. The Israeli military told Israeli media that the “dumb bombs” it has been dropping on Gaza are standard weapons of war. As for Lavender, the Israeli military said they “don’t use artificial intelligence to tell or predict whether a person is a terrorist,” adding that information databases are “just tools” for military analysts who make the decisions.

“AI” is a nebulous and shifting term, which has been used as a pretext for advancing imperialist and capitalist agendas.

“Artificial Intelligence” Is the Refuge of Scoundrels

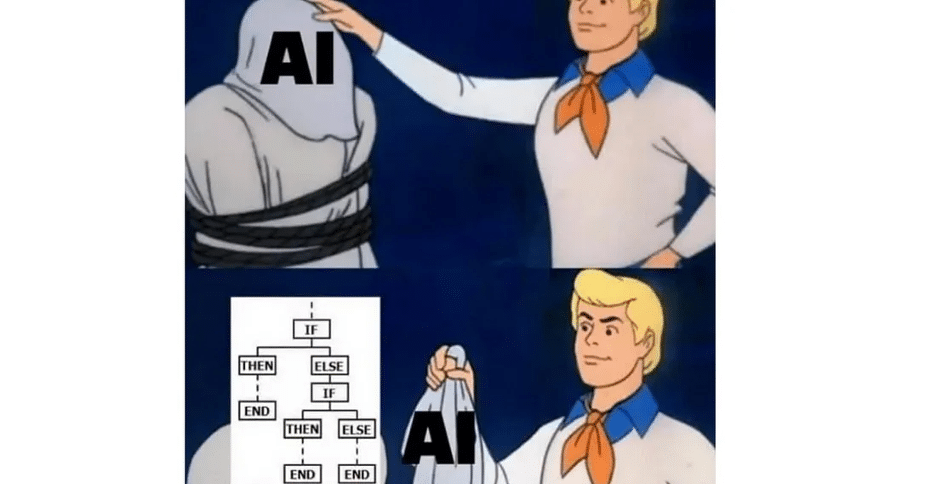

What nearly all discussions of AI miss is the malleability of the term. Systems that have been used for many decades have been rebranded as “AI.” Basically anything involving a computer can be “AI” in the right context, from systems that calculate insurance premiums to the simplest “if-then” statement. Calling something AI is mere marketing, putting a fresh gloss on old political agendas. The term “AI” is useful for oppressive institutions because it hooks into many ideologies about hierarchies of intelligence, labor, and the limits of the human mind.

If there’s an “algorithm” for what Israel is doing, it can be captured by a simple “if-then” statement: “If it’s necessary for Indigenous life, then try to destroy it.” This is the logic that settler-colonial states have been following long before digital computers existed. No need to invoke “AI.”

If we accept the premise of an “AI-powered genocide,” then the issue isn’t racist, Zionist ideology itself; we simply need experts to help us fix the computer, whose “decisions” were less than perfect.

Abraham’s reporting (co-authored with Harry Davies) on Israel’s supposed use of a ChatGPT-like system, published in both +972 and the Guardian, is a prime example. Abraham begins by describing the system in a way that sounds like PR for Israeli technologies: a tool created by the Israeli army’s “elite cyber warfare squad,” which is “being fed vast amounts of intelligence collected on the everyday lives of Palestinians living under occupation.” Yet there are downsides. Davies and Abraham’s expert on the matter is Brianna Rosen, a former White House national security advisor.

As expected, Rosen told the journalists that ChatGPT holds great potential for the state; that it could “detect threats humans might miss, even before they arise.” But there are “risks” of “drawing false connections and faulty conclusions.” Like many other servants of empire, Rosen says that it’s really AI that is now waging war, and she wants to improve its “transparency” and “accountability.” This is done in the name of “national security.” The questions Rosen wants us to focus on are:

What safeguards has Israel put in place to prevent errors, hallucination, misuse, and even corruption of AI-based targeting systems? Can Israeli officials fully explain how AI targeting outputs were generated? What level of confidence exists in the traceability and explainability of results?

Rosen concludes that it’s not clear how “responsible AI” is being practiced. One of her complaints is that Israel, which she describes as “a key U.S. ally,” hasn’t endorsed the principles of AI ethics that enlightened countries such as the United States have (she notes that Russia hasn’t, either). In 2020, for example, the Pentagon published an “Ethical Principles of AI” statement that includes “Responsibility,” “Traceability,” and “Equitable” use (which is promised to reduce “unintended bias”). If only Israel could be so ethical!

The experts discuss these weighty matters in gatherings such as the Responsible AI in the Military Domain Summit (2024) and the Microsoft-sponsored Global Conference on AI, Security and Ethics (2025) hosted by the United Nations. It’s safe to say that if Henry Kissinger (who rebranded himself as an “AI expert” late in life) were still alive, he’d be a keynote speaker at these “responsible AI for war” conferences.

Davies and Abraham give an example of the consequences when AI isn’t “responsible.” In their article on the Israeli military’s ChatGPT-like system, they refer to a case where “AI was likely used by intelligence officers to help select a target in an Israeli airstrike in Gaza in November 2023 that killed four people, including three teenage girls.”

“Killed four people”? It’s remarkable that this article, published March 6, 2025, neglects to mention that tens of thousands—and likely far more—Palestinians have already been killed by Israel in this genocide. Were there tens of thousands of these technical errors? Or are Israeli human beings enacting a genocide their leaders announced beforehand?

The loudest voices on AI take the same approach. AI Now, a think tank created by companies complicit in the genocide (Microsoft and Google) and alumni of the Obama White House, recycled the analysis of +972, crafting it to appeal to the US “national security” establishment. Heidy Khlaaf of AI Now (formerly a Microsoft employee) told Defense One this about the Israeli military’s use of “AI”:

the data is inaccurate and [the models] sort of attempt to find patterns that may not exist, like being in a WhatsApp group with a…Hamas member should not qualify you for [a death] sentence. But in this case, that is what we’re observing.

The implication here is that if a Hamas member were targeted, not just someone who’s in a WhatsApp group with one, then this would be acceptable; it wouldn’t count as a machine “error.” In this worldview, Americans and Israelis decide who lives and who dies in Gaza by right: they just need to do so responsibly.

A similar sleight of hand occurs when AI is invoked in other areas. When it comes to policing and prisons, for example, the experts are preoccupied with the “bias” of the “sentencing algorithm” (as if the US carceral state can be made “unbiased”). But the algorithm didn’t make the US incarcerate nearly two million people, significantly more than China and more than three times as many as India, even though each of those countries has roughly four times the US population. Instead of thinking about how to abolish this abhorrent, racist system, AI experts are here to make sure those two million are selected without undue bias.

Whether the discussion is about policing or genocide, this expert class offers the only solution it knows: forming more partnerships between the “stakeholders”—corporations, the state, academia, and NGOs—while promising “transparency,” “accountability,” and “bias reduction.” Fair, unbiased genocide and mass incarceration delivered by the most updated technologies.

“I See, I Shoot”

The myth of an automated, high-tech means of controlling Palestinians predates the current obsession with AI. For years, Israel has boasted about its weapons systems, presenting them as both impenetrable and nearly fully automated. A video from 2009 shows the Samson remote controlled weapon station, made by Rafael, in action along the Gaza colonial fence. The system is known in Hebrew as “roah-yorah,” or “I see, I shoot.” The system’s female operators (the Hebrew name uses the feminine form of the verbs “see” and “shoot”) are in communication with a group of other female soldiers who monitor the colonial fence (the tatzpitaniyot, who were captured by the resistance in Al-Aqsa Flood). When they see movements of “Hamas militants” from afar, the video says, they shoot them down.

A video from 2009 of female Israeli soldiers operating the Samson “I See, I Shoot” system, made by Israeli weapons developer Rafael. In the video, the operator says: “I shoot the first [‘Hamas militant’]. I get confirmation from the [female] soldier [in Hebrew, tatzpitanit] who is monitoring that there is indeed a hit…I shoot the second. The third.” The video says that the story of this weapon system has “a feminist aspect to it, because initially the army called the system ‘I See, I Shoot’ using masculine verbs, but then it was decided that it will be operated by women soldiers.”

But there’s a big gap here between image and reality. In 2018, we saw how the nearly “automated” fence was made up of Israeli military snipers who were shooting with the intent to maim (or kill) Palestinians. And on October 7, 2023, we saw how the Palestinian resistance broke through and easily glided over the automated “killer AI” fence, even blowing up an “I See, I Shoot” station from the air.

Palestinian resistance fighters blowing up an “I See, I Shoot” station from a hang glider on October 7, 2023.

Microsoft and Israel: “A Marriage Made in Heaven”

The AI-centered stories still insist there’s a magical new technology that could enable Israel to do great harm—and that if only this technology were regulated, the problem would be solved. Once again, +972 set the terms of discussion, with an article by Yuval Abraham describing Microsoft’s contracts with Israel for advanced “AI” systems. This story and similar ones have been presented as “breaking news.” Are they?

It’s well-documented that Microsoft (like Google and other companies) has been providing services to Israel for decades. These include routine computing and data storage services, which are necessary for daily oppression and surveillance but have little to do with AI. Microsoft is also occupying land in ’48 Palestine (which includes a new data center opened in October 2023). It also relies on the Israeli military to supply its workforce. Former Microsoft CEO Steve Ballmer once said “Microsoft is as much an Israeli company as an American company,” while current Microsoft CEO Satya Nadella praised the “human capital” that the Zionist entity produces. As Benjamin Netanyahu summed it up, in a 2016 meeting with Nadella marking twenty-five years of collaboration: Microsoft and Israel are “a marriage made in heaven but recognized here on earth.”

“AI-Powered” Deportations?

We are now seeing another wave of AI propaganda surrounding the detention and deportation of students engaging in Palestine solidarity work. There are many stories about how the US government and Zionist groups are applying AI to flag people for deportation. Once again, the term “AI” is being used to dress up old political projects.

For decades, it has been easy to make lists of people from social media who match certain criteria—that’s what the platforms were built for. It is simple to search posts for keywords that the government might now deem “pro-terror” (“resistance,” “settler-colonialism,” “BDS,” or whatever). The next step is to compile lists of people based on whether they’ve shared articles from certain sites (say, Mondoweiss or the Electronic Intifada) or activist groups (for example, Students for Justice in Palestine).

The Zionist startup that says it is employing “cutting-edge AI” to flag people could very well be doing these simple things, yet calling it “AI” makes the operation seem more powerful and sophisticated. This goes beyond a marketing agenda of overselling a technology by claiming it is better than it really is.

AI propaganda can also make us forget that social media platforms were designed to surveil and create profit. In their 2014 article on surveillance capitalism, John Bellamy Foster and Robert W. McChesney noted that an early application of ARPANET—a Pentagon network created in the late 1960s, which later became the internet—was to store millions of files the state collected on US citizens involved in anti-war movements (known as the Army Files). The digital platforms we have today grew from these efforts to surveil and control social movements, and were developed by the same institutions.

Focusing on the (mis)uses of “AI” regarding deportations also distracts from some important and difficult political questions, such as: Why do so many activist groups depend on platforms made by companies that serve US imperialism? How can we challenge these platforms and overcome decades of propaganda that said social media is a liberatory tool for grassroots struggles? What can be done about a culture of activism that, in the imperial core at least, is often more focused on creating images of resistance—thereby feeding the platforms of surveillance—than materially disrupting oppressive pipelines (as groups like Palestine Action have done)? And what could be done to reduce the harms of these digital platforms, now that they’re everywhere, and that so much information about activists is readily accessible?

Getting Off the Propaganda Train

Every so often, an executive from one of the tech monopolies tells the media he’s alarmed that AI is going to replace human beings. Generations of Hollywood blockbusters (2001: A Space Odyssey in 1968, The Terminator in 1984, The Matrix in 1999, Avengers: Age of Ultron in 2015, Subservience in 2024, and many more) have trained us to think of AI as something awe-inspiring and terrifying. Over the same time span, Israeli propagandists have put forward a similar image of the Israeli military: an invincible, all-knowing machine full of intelligence and technology.

These parallel propaganda trains—of AI and of Israel—have the same goals: puffing up the self-image of imperialists as gods, and inducing despair in those trying to resist genocide. In fact, as anticolonial rebels have shouted through the centuries, our oppressors have nothing that we don’t have. This genocide can be stopped. If it is, it will be stopped by human beings resisting, not by fixing a computer.